Access to the cluster is possible exclusively via the login nodes, and only by ssh.

The current list of accessible login nodes can be found here.

Details on first login and how to enable ssh-key-based login

Do not confuse the login nodes with the HPC cluster!

To use the cluster, it is not sufficient to simply start your program on a login node.

The login nodes are not intended for “productive” or long-running calculations!

Host Keys

The ssh protocol allows not only user verification and authentication, but also cryptographic host verification. This prevents manipulated or malicious hosts from presenting themselves as the one you intended to reach.

Each machine with an ssh server identifies itself with a host key, which you should check at the first connection. Your ssh client will present the host key fingerprint of the machine you are connecting to and ask you to accept or reject it by typing “yes” or “no”.

You should only answer “yes” after you have compared the host key's fingerprint with the one listed below – then you can trust this machine to really be one of our login nodes:

| Key type | ED25519 (preferred, but other key types are also allowed – the once popular RSA keys are not accepted anymore – see below for more details) |
| --- | --- |
| Public Key | AAAAC3NzaC1lZDI1NTE5AAAAILTlBfX7g8HAbMy7x7vSS66HO6QtEItNByqtSkRUZauo |
| Fingerprint | SHA256:sCfHqKOHUK45d7XaHyWN/N8cTUd8Nh6o6T1ngNhbQa8 |
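The comparison described above can also be scripted. The following is a minimal sketch, not an official tool: the function names “fingerprint_of_key” and “check_fingerprint” are made up for illustration, and “fingerprint_of_key” assumes an OpenSSH installation that provides “ssh-keygen”. The key and fingerprint strings are copied from the table above.

```shell
#!/bin/sh
# Values copied from the host key table above.
published_key='AAAAC3NzaC1lZDI1NTE5AAAAILTlBfX7g8HAbMy7x7vSS66HO6QtEItNByqtSkRUZauo'
published_fp='SHA256:sCfHqKOHUK45d7XaHyWN/N8cTUd8Nh6o6T1ngNhbQa8'

# Derive the fingerprint line from the published public key.
# "ssh-keygen -l -f -" reads a public key from standard input.
# (Hypothetical helper name; requires OpenSSH's ssh-keygen.)
fingerprint_of_key() {
    printf 'ssh-ed25519 %s\n' "$published_key" | ssh-keygen -l -f -
}

# Compare the fingerprint your ssh client displays (first argument)
# with the published one. Only answer "yes" to ssh on a match.
# (Hypothetical helper name.)
check_fingerprint() {
    if [ "$1" = "$published_fp" ]; then
        echo "match"
    else
        echo "MISMATCH"
        return 1
    fi
}
```

Call “check_fingerprint” with the fingerprint string your ssh client shows at the first connection. Copying the string into a script avoids comparing 43 characters by eye.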

Based on security recommendations of the German BSI (TR-02102-1), ssh keys based on RSA are no longer permitted on the Lichtenberg cluster.

Instead, please use the recommended “ed25519” algorithm.

Because the login nodes face the public (and sometimes evil) internet, we have to install (security) updates from time to time. This happens on short notice (30 minutes), so do not expect every login node to be available 24/7.

Use Cases

The login nodes are shared by all users of the HPC cluster and are intended to be used only for

- job preparation and submission

- copying data in and out of the cluster (with scp, sftp or rsync via ssh)

- short test runs of your program (≤ 30 minutes)

- debugging your software

- job status checking

While test-driving your software on a login node, check the node's current CPU load with “top” or “uptime”, and reduce your impact by using fewer cores/threads/processes or at least by using “nice”.
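One way to turn this advice into a habit is a small helper that picks a thread count from the current load. This is only a sketch under assumptions: the function name “suggest_threads” and its heuristic (cores minus the rounded-up load, never below 1) are illustrative and not a site policy, “./my_program” is a placeholder, and “OMP_NUM_THREADS” only has an effect on OpenMP programs.

```shell
#!/bin/sh
# Suggest a thread count from the 1-minute load average ($1) and the
# number of cores ($2): cores minus the rounded-up load, at least 1.
# The heuristic is an illustrative assumption, not a site rule.
suggest_threads() {
    awk -v avg="$1" -v cores="$2" 'BEGIN {
        busy = int(avg)
        if (busy < avg) busy++      # round the load up to whole cores
        free = cores - busy
        if (free < 1) free = 1      # always allow at least one thread
        print free
    }'
}

# Example use on a Linux login node (./my_program is a placeholder):
#   avg=$(cut -d " " -f 1 /proc/loadavg)
#   OMP_NUM_THREADS=$(suggest_threads "$avg" "$(nproc)") nice -n 19 ./my_program
```

“nice -n 19” gives the test run the lowest scheduling priority, so other users' interactive work on the same login node is affected as little as possible.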

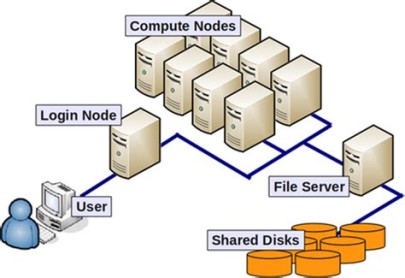

From a login node, your productive calculations need to be submitted as batch jobs into the queue (usually with “sbatch”). For that, you need to specify your required resources per job (e.g. amount of main memory, number of nodes (and tasks), maximum runtime).
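Submission and a first status check can be combined in a small wrapper. The sketch below assumes the standard Slurm commands “sbatch” and “squeue” are available on the login node; the function name “submit_and_show” and the file name “job.sh” are placeholders.

```shell
#!/bin/sh
# Submit a batch script ($1) and show its entry in the queue.
# "sbatch --parsable" prints only the job ID, optionally followed
# by ";clustername".
# (Hypothetical helper name.)
submit_and_show() {
    jobid=$(sbatch --parsable "$1") || return 1
    jobid=${jobid%%;*}          # strip an optional ";clustername" suffix
    squeue -j "$jobid"
}

# Usage on a login node (job.sh is a placeholder for your batch script):
#   submit_and_show job.sh
```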

Batch System Slurm

The arbitration, dispatching and processing of all user jobs on the cluster is organized with the Slurm batch system. Slurm calculates when and where a given job will be started, considering the resource requirements of all jobs, the workload of the system, the waiting time of the job and the priority of the associated project.

When eligible to be run, the user jobs are dispatched to one (or more) compute nodes and started there by Slurm.

The batch system expects a batch script for each job (array), which contains

- the resource requirements of the job in form of #SBATCH … pragmas and

- the actual commands and programs you want to be run in your job.
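A batch script with both parts might look like the following minimal sketch. The option names are standard Slurm options, but all values are placeholders, “./my_program” is hypothetical, and any project or account settings this cluster may require are not shown.

```shell
#!/bin/bash
# Part 1: resource requirements (all values are placeholders).
#SBATCH --job-name=example
#SBATCH --ntasks=1                # one task (process)
#SBATCH --cpus-per-task=4         # four cores for that task
#SBATCH --mem-per-cpu=2000        # main memory per core, in MB
#SBATCH --time=00:30:00           # maximum runtime (hh:mm:ss)
#SBATCH --output=example_%j.out   # %j is replaced by the job ID

# Part 2: the commands to run inside the job.
echo "Job ${SLURM_JOB_ID:-unknown} running on $(uname -n)"

# Use as many threads as cores were requested (falls back to 1
# when the script is run outside of Slurm).
export OMP_NUM_THREADS="${SLURM_CPUS_PER_TASK:-1}"

# ./my_program    # hypothetical: replace with your actual program
```

To the shell, the “#SBATCH” lines are ordinary comments. Slurm reads them when the script is submitted, which is why they have to appear before the first command.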

The batch script is a plain text file (with UNIX line feeds!) and can be created on your local PC and then transferred to a login node. In Windows, use “Notepad++” and switch to UNIX (LF) in “Edit” – “EOL Conversion” before saving the script.

Or you can create it with UNIX editors on the login node itself and avoid the fuss with improper line feeds.
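If a script has already been transferred with Windows line endings, it can be checked and repaired on the login node. The helper names “has_cr” and “to_lf” below are made up for this sketch. Note that “to_lf” removes every carriage return in the file, not only those directly before a line feed.

```shell
#!/bin/sh
# Succeeds if the file ($1) contains at least one carriage return.
# (Hypothetical helper name.)
has_cr() {
    grep -q "$(printf '\r')" "$1"
}

# Rewrite the file ($1) in place without carriage returns.
# Writing back with "cat" keeps the original file permissions.
# (Hypothetical helper name.)
to_lf() {
    tr -d '\r' < "$1" > "$1.tmp" && cat "$1.tmp" > "$1" && rm -f "$1.tmp"
}

# Usage (job.sh is a placeholder for your batch script):
#   has_cr job.sh && to_lf job.sh
```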

Further information:

- Commands of batch systems, and examples of batch jobs

- Queues and computing resources

- File systems

- FAQ about the batch scheduler and job scripts